By Mahesh Patil, Sr. Technical Director, PernixData

Virtualization and storage problems have become synonymous. Most of us have experienced the joy of virtualization first hand. A virtualized infrastructure is a great productivity enhancer for the IT organization, not to mention the many management benefits it brings. But once you get past the rosy honeymoon, the ugly underbelly of virtualization starts showing up: storage performance bottlenecks. Slow storage, non-deterministic storage performance, no insight into which virtual machines will be impacted or which workload is going to make the storage keel over, and frustrated end users. We are all too familiar with this storyline.

Shared Storage and Virtualization

If one thinks about it, these performance problems in a virtualized infrastructure have their roots in the very benefits that one enjoys from such a system. A virtualized environment is built around the concept of shared storage, and that architecture was not an accident but an active choice. What do you think lies at the crux of a feature like VMware VMotion? VMotion allows virtual machines to be moved seamlessly around your infrastructure, but it requires that the hosts between which a virtual machine moves have access to shared storage. So shared storage is not an option for the administrator; it is the only choice. And as night follows day, a shared resource of any kind is followed by contention on that resource. Unfortunately, IT administrators are bearing the brunt of end-user frustration as a result of this choice. The problem is further exacerbated by the limited range of storage solutions available to the administrator. Most traditional shared storage solutions are built around the idea of a bigger and faster box. But is this the right choice? Does it actually solve the problem?

Making the Right Choice

The database world is going through a similar problem: traditional databases cannot handle all the requirements of new-age applications, and we have seen a proliferation of scale-out data solutions introduced to address this. Although the virtualization world has been slow to catch on, virtualization storage vendors have begun to offer similar scale-out approaches. Just as in the database world, these scale-out solutions have the potential to revolutionize storage and offer much-needed relief to IT administrators. However, not all solutions are created equal, and it is important that IT admins understand the different kinds of scale-out solutions available so that they can make the right architecture choice for their environment.

Scale-Out

Scale-out, as opposed to scale-up, promises that a solution can grow with the number of hosts in the cluster, but very often solutions fail to live up to this promise. Why is scale-out hard? The specifics differ from solution to solution, but the common theme is that multiple hosts mean multiple copies of data, and multiple copies need to be kept coherent, or consistent.

The cost of keeping the copies coherent, henceforth referred to as “cache coherency”, goes up as you move down the following list:

- Immutable objects

- Mutable objects. Single Reader, Single Writer (Single-RW)

- Mutable objects. Multiple Readers, Multiple Writers (Multi-RW)

The cost of keeping immutable objects consistent is negligible, since the copies never change. For mutable objects, as more writers are added to the mix, the system has to start exchanging messages over the wire to keep the copies of the object consistent. More often than not, the number of these messages grows much faster than the number of hosts: if every host writes and every write must be announced to every other host, total message traffic grows roughly with the square of the cluster size. In other words, the cost of cache coherency increases with the number of hosts in the cluster. As we shall see, not all problems warrant a Multi-RW cache coherency solution, and your ability to identify the problem and choose the right solution has ripple effects on system performance, complexity and stability.
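To make the ordering of the list concrete, here is a small Python sketch of a toy cost model. It assumes a naive broadcast-invalidation protocol in which every host caches the object and every Multi-RW write is announced to all other hosts. The function name `coherency_messages` and its parameters are hypothetical, and real protocols are more economical than naive broadcast.

```python
"""Toy model of cache-coherency message traffic (illustrative assumptions only).

Assumptions (hypothetical, not taken from any product):
- Every host holds a cached copy of the shared object.
- Multi-RW: every write is broadcast as an invalidation to every other host.
- Single-RW: only the owning host reads or writes, so no messages are needed.
- Immutable: the object never changes, so no messages are needed.
"""


def coherency_messages(model: str, hosts: int, writes_per_host: int) -> int:
    """Return the number of coherency messages for one round of writes."""
    if hosts < 1 or writes_per_host < 0:
        raise ValueError("hosts must be >= 1 and writes_per_host >= 0")
    if model in ("immutable", "single-rw"):
        # Nothing to keep in sync: either no writes, or a single reader/writer.
        return 0
    if model == "multi-rw":
        # Each host writes; each write is announced to the other (hosts - 1) hosts.
        return hosts * writes_per_host * (hosts - 1)
    raise ValueError(f"unknown model: {model}")


if __name__ == "__main__":
    # Compare Single-RW and Multi-RW traffic as the cluster grows.
    for n in (2, 4, 8, 16, 32):
        print(n, coherency_messages("single-rw", n, 100),
              coherency_messages("multi-rw", n, 100))
```

In this model, going from 16 to 32 hosts roughly quadruples the Multi-RW traffic (24,000 to 99,200 messages for 100 writes per host), while the immutable and Single-RW cases stay at zero. Production protocols use smarter techniques than broadcast, so treat the numbers as an illustration of the trend rather than a measurement.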

Let’s take a look at a couple of examples. The first is a system that needs “Multi-RW” cache coherency, and the second is a problem that needs only “Single-RW” cache coherency. Both systems are scale-out in their own way.

Example 1

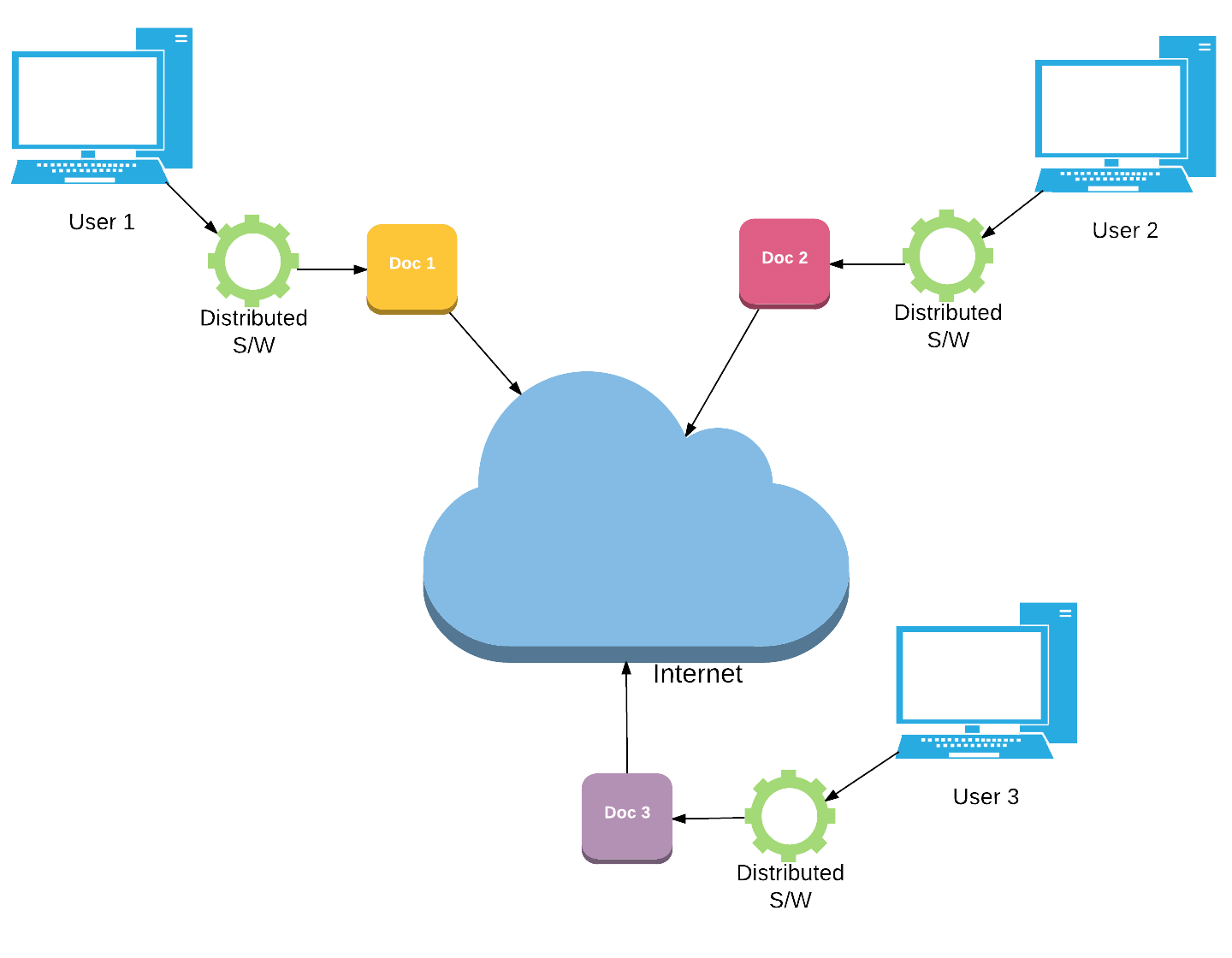

- A distributed file editing system.

- Multiple users are geographically distributed.

- Multiple users can share and edit the same document at the same time.

- Updates to the document are visible to all the users almost in real time.

- User latency is expected to be comparable to editing a document on the local desktop.

If latency is the key metric by which the system will be judged, then the system starts to look like the distributed system shown above, in which the software lets each user modify the document locally while, in the background, it reconciles the changes made by multiple users. In effect, to ensure Multi-RW cache coherency, the system has to exchange messages among the various hosts to keep every copy of the document up to date. In addition to the Multi-RW cache coherency algorithm, the system also has to solve:

- Consistency – How are the updates viewed by the various users? There are various models to choose from: fully consistent, eventually consistent, ordered, etc. Under an ordered model, for instance, if one user sees one update before another, then every other user should see them in the same order.

- Partition – How does the system behave if a network partition causes some users to be disconnected from the rest of the users?

- High Availability – Data may have to be replicated for resiliency.
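The ordered consistency model can be sketched with a central sequencer that stamps every edit with a global sequence number; each replica buffers edits that arrive early and applies them strictly in sequence order. This is one common technique (a simple form of total-order broadcast), not necessarily how any particular product works, and all class names here are hypothetical.

```python
"""Sketch: keeping replicas of a shared document in the same update order.

Technique: a central sequencer assigns a global sequence number to each update
(a simple form of total-order broadcast). Shown for illustration only; all
names are hypothetical.
"""
from dataclasses import dataclass, field


@dataclass
class Update:
    seq: int      # global order assigned by the sequencer
    author: str   # user who made the edit
    text: str     # edit content appended to the document


class Sequencer:
    """Hands out consecutive sequence numbers to incoming edits."""

    def __init__(self) -> None:
        self.next_seq = 0

    def stamp(self, author: str, text: str) -> Update:
        update = Update(self.next_seq, author, text)
        self.next_seq += 1
        return update


@dataclass
class Replica:
    name: str
    doc: list = field(default_factory=list)      # applied edits, in order
    pending: dict = field(default_factory=dict)  # seq -> update received early
    expected: int = 0                            # next seq this replica may apply

    def receive(self, update: Update) -> None:
        # Buffer the update, then apply every update that is now contiguous.
        self.pending[update.seq] = update
        while self.expected in self.pending:
            u = self.pending.pop(self.expected)
            self.doc.append(f"{u.author}:{u.text}")
            self.expected += 1


if __name__ == "__main__":
    seq = Sequencer()
    updates = [seq.stamp("alice", "intro"), seq.stamp("bob", "fix typo"),
               seq.stamp("carol", "add table")]
    east, west = Replica("east"), Replica("west")
    # The network delivers the same updates to each replica in a different order.
    for u in updates:
        east.receive(u)
    for u in reversed(updates):
        west.receive(u)
    assert east.doc == west.doc  # both replicas applied edits in the same order
    print(east.doc)
```

Note what this costs: every edit makes a trip through the sequencer before it can be applied in order, and the sequencer itself becomes a single point of failure, which drags in exactly the partition and high-availability questions listed above.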

There are well-known algorithms and solutions for each of the above, but most of them walk a tightrope between performance, correctness and availability. Often, improving one hurts another; for example, a highly performant system may not be fully consistent. The tradeoffs associated with the different solutions have a non-trivial performance impact, not to mention the complexity of the algorithms and the difficulty of implementing them correctly.

Example 2

- A file editing system.

- Multiple users are geographically distributed.

- Each user can edit only his or her own document.

- Users can move around from one location to the other, but still only access their own files.

- User latency is expected to be comparable to editing a document on the local desktop.

In this example, since one and only one user modifies a document, the system can simply use a cache coherency algorithm built for Single-RW. Such an algorithm does not have to exchange messages with other hosts to keep the document up to date. Let’s also revisit the other problems that the solution in “Example 1” had to solve.

- Consistency – Since a user only updates his or her own document, the copy of the document is always consistent.

- Partition – A network partition has far less impact: as long as users can work on their own documents, they can keep making progress, so this problem becomes trivial as well.

- High Availability – Similar to “Example 1,” data has to be replicated for resiliency.
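A minimal Python sketch of Single-RW coherency for Example 2, assuming an ownership model: each document has exactly one owner host, writes never consult other hosts, and a user moving to a new location costs a single ownership handoff. The class `SingleWriterStore`, its counters, and the one-replica resiliency scheme are hypothetical, chosen only to separate coherency traffic from replication traffic; for simplicity the authoritative copy lives in one dictionary rather than on the hosts themselves.

```python
"""Sketch: Single-RW coherency through ownership (hypothetical names throughout).

Assumptions for illustration:
- Each document has exactly one owner host; only the owner reads or writes it.
- When a user moves, ownership is handed off once; no other host is consulted.
- For resiliency, each write is also copied to one hypothetical replica host.
"""


class SingleWriterStore:
    def __init__(self) -> None:
        self.owner = {}            # doc -> host currently allowed to read/write it
        self.data = {}             # doc -> list of edits (the authoritative copy)
        self.coherency_msgs = 0    # messages needed purely to keep copies in sync
        self.replication_msgs = 0  # messages sent only for high availability

    def write(self, host: str, doc: str, edit: str) -> None:
        # The first writer of a document becomes its owner.
        if self.owner.setdefault(doc, host) != host:
            raise PermissionError(f"{host} does not own {doc}")
        self.data.setdefault(doc, []).append(edit)  # local write, no coordination
        self.replication_msgs += 1                  # copy the edit to the replica

    def move(self, doc: str, new_host: str) -> None:
        # One handoff per move; the old owner simply stops accessing the doc.
        if self.owner.get(doc) != new_host:
            self.owner[doc] = new_host
            self.coherency_msgs += 1


if __name__ == "__main__":
    store = SingleWriterStore()
    for i in range(1000):
        store.write("host-a", "alice.doc", f"edit {i}")
    store.move("alice.doc", "host-b")  # the user changes location
    store.write("host-b", "alice.doc", "edit after move")
    print(store.coherency_msgs, store.replication_msgs)  # 1 1001
```

The only coherency cost here is the handoff when the user moves; a thousand edits needed no coordination at all. Replication for high availability still happens on every write, just as it would in a Multi-RW system.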

By now you should have a sense that building a distributed scale-out solution for this use case is a different proposition from building one for “Example 1”, and why this particular problem is well suited to a scale-out solution built for low latency.

Virtualization Cache Coherency

Virtualization workloads fall into the same category as Example 2 above. Let’s look at why.

Each VM reads and writes its own virtual disk; there is no crosstalk when it comes to VM I/O traffic. When a VM VMotions from one host to another, it is still interested only in its own disk. This is an important observation: for most deployments, virtualization needs only a Single-RW cache coherency solution. A solution that addresses Multi-RW cache coherency can certainly be used, but the question the administrator should ask is: do you need such a solution? With an understanding of this basic difference between scale-out solutions, the IT administrator can evaluate the pros and cons of each approach, both in terms of performance benefits and the complexity of the solution.
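One way to check this observation against a real environment is to scan an I/O trace for disks that are written from one host while being accessed from another at the same time. The sketch below assumes a hypothetical trace format of `(time_step, host, disk, op)` tuples and treats accesses as concurrent only when they share a time step, which is a deliberate simplification; the function name and sample data are illustrative only.

```python
"""Sketch: does an I/O trace actually need Multi-RW coherency?

Hypothetical trace format: (time_step, host, disk, op) with op in {"r", "w"}.
Assumption: two accesses count as concurrent only if they share a time step.
A disk needs Multi-RW only if, in some time step, it is written from one host
and accessed from another. Access from different hosts at different times
(for example, before and after a VMotion) is still Single-RW.
"""
from collections import defaultdict


def disks_needing_multi_rw(trace):
    # (time_step, disk) -> hosts that accessed it, and hosts that wrote it
    window = defaultdict(lambda: {"hosts": set(), "writers": set()})
    for t, host, disk, op in trace:
        entry = window[(t, disk)]
        entry["hosts"].add(host)
        if op == "w":
            entry["writers"].add(host)
    # Flag a disk if any time step has a writer plus more than one accessing host.
    return sorted({disk for (t, disk), e in window.items()
                   if e["writers"] and len(e["hosts"]) > 1})


if __name__ == "__main__":
    trace = [
        (0, "esx1", "vm1.vmdk", "w"), (0, "esx2", "vm2.vmdk", "w"),
        (1, "esx1", "vm1.vmdk", "r"),
        (2, "esx2", "vm1.vmdk", "w"),     # vm1 after a VMotion to esx2
        (3, "esx1", "shared.vmdk", "w"),  # hypothetical disk shared by two VMs
        (3, "esx2", "shared.vmdk", "r"),
    ]
    print(disks_needing_multi_rw(trace))  # ['shared.vmdk']
```

In this sample, `vm1.vmdk` is accessed from two hosts but never at the same time, so Single-RW coherency with an ownership handoff is enough; only a disk genuinely shared between VMs on different hosts would call for Multi-RW.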

Scale-Out Solutions

Let’s look at the typical scale-out solutions available to the administrator today.

- Scaling out the storage.

These systems are typically based on a fully distributed file system running on the storage box and are configured to provide full consistency. Example solutions in this space are EMC Isilon and Red Hat Ceph. Because these solutions are fully distributed file systems, they typically implement a Multi-RW cache coherency algorithm.

- Host-side scale-out

These systems typically use host-side resources such as flash and memory to scale out storage performance with the number of hosts in the system. Example solutions in this space are PernixData FVP and various hyper-converged solutions. The PernixData solution stands out in this respect: it is one of the few solutions that leverage a Single-RW cache coherency algorithm, allowing it to scale performance linearly with the number of hosts in your cluster. The hyper-converged solutions in the virtualized space that claim to make datacenter storage behave like a public cloud typically adopt a Multi-RW cache coherency approach. As discussed above, a Multi-RW solution will solve the problem, but it pays a price in complexity and performance for a problem that does not truly need Multi-RW coherency.

Conclusion

Virtualization does not have to be synonymous with storage performance problems. Scale-out storage systems can provide a compelling solution to storage performance issues in virtualized environments. Administrators who are bearing the brunt of user frustration can use their understanding of the cache coherency characteristics of virtualization, namely that it is a Single-RW cache coherency problem, to choose the right solution for their environment.