Closing the Cyber-Physical Gap in Data Center Security

Data center security is typically divided into two core domains: digital defenses such as firewalls, identity systems, and network monitoring, and physical protections that include surveillance systems, access controls, and on-site security personnel.

Between those domains lies a third operational layer – power, cooling, and building management systems – that is typically handled by facilities teams rather than security teams and, as a result, is increasingly exposed to cyberattack.

As operational infrastructure becomes more connected, the need to monitor its actual behavior – at the physical level – has become urgent. This article explores why visibility at Level 0 of the Purdue Model is now essential for data center resilience, and what recent regulation and real-world incidents reveal about the evolving risk.

A Likely Cyber-Physical Incident Hidden in Plain Sight

In August 2023, Microsoft’s Australia East data center experienced a major cooling failure that led to extended outages across Azure and M365 services. The disruption began with a reported voltage dip on the grid, which caused five of seven chillers in one of the data halls to shut down. Only one backup unit restarted, forcing Microsoft to take workloads offline to prevent hardware damage.

But the failure wasn’t mechanical. Post-incident analysis showed that the chillers had not received the control signals required to restart the circulation pumps. In facilities with automated recovery logic and redundant infrastructure, that kind of failure across multiple systems at once is highly improbable. The simultaneous loss of multiple independent systems points not to a power event, but to a disruption in control logic.

The technical pattern closely resembles what a process-layer cyberattack would look like. If an attacker had accessed the building management system or an engineering interface and modified how the chillers respond to voltage fluctuations, the result would have been identical. And because those control changes fall entirely outside traditional network and log monitoring, the disruption would not trigger a security alert.

The incident was treated strictly as an operational malfunction. No cybersecurity investigation was disclosed. But its behavior (a sudden, coordinated failure of automated control without hardware damage) bears all the characteristics of a cyber-physical compromise at the process layer.

The conclusion? It’s a risk that data center operators can no longer afford to ignore. And increasingly, neither can regulators.

The Regulatory Bar Has Already Been Raised

Recent regulations across Europe and the UK are making it clear: these systems now fall within the scope of national cybersecurity policy and compliance requirements. Governments aren’t waiting for a confirmed attack on a data center – they’re moving ahead with policies that assume one is coming.

Across regions, the scope of cybersecurity regulation is expanding. These requirements now extend beyond IT networks to include the operational technology (OT) that controls power, cooling, and environmental systems. While these systems haven’t traditionally been a focus of cybersecurity programs, they are essential to uptime – and increasingly central to regulatory compliance.

In the European Union, the NIS2 Directive (which member states were required to transpose into national law by October 17, 2024) explicitly applies to data center and cloud providers. It requires operators to implement real-time monitoring, anomaly detection, and secure control across both IT and OT environments. That includes field-level devices often overlooked by traditional cybersecurity programs.

One area of particular concern is the process layer (Level 0) of the Purdue Model. This layer includes field-level devices (such as sensors and actuators) that directly monitor and influence real-world operations. These components often operate without authentication, logging, or audit controls. That makes them vulnerable to misuse or tampering that can disrupt operations without triggering a single alert at the network level.

In the United Kingdom, the 2024 designation of data centers as Critical National Infrastructure established that disruptions to systems like chillers, pumps, and building automation are no longer just a facilities issue. They are now treated as potential threats to national cybersecurity.

In the United States, there is no single law requiring process-layer monitoring in private data centers. But regulatory pressure is building on several fronts:

- The SEC’s 2023 cybersecurity disclosure rule mandates that public companies report material cyber incidents (including physical disruptions) within four business days of determining that the incident is material.

- The White House’s 2025 National Cybersecurity Strategy specifically calls for stronger protection of HVAC, industrial control systems, and building management platforms.

- Customers in heavily regulated sectors (from banking to healthcare) are increasingly demanding that service providers demonstrate visibility into cyber-physical risks.

What ties all of this together is a shift in expectations. Resilience is no longer defined by having a backup generator or failover protocol. It now means being able to detect and diagnose disruptions that may begin in software but manifest in physical infrastructure. That kind of visibility doesn’t come from network tools. It starts at Level 0 – where operational systems actually move, pump, cool, and power.

From IT Access to Physical Consequence: A Plausible Attack Chain

While Microsoft has not disclosed a cybersecurity investigation, the nature of the failure in its Australia East data center is difficult to explain as a simple technical glitch. Redundant chiller systems are designed specifically to recover from voltage events. The odds that five out of seven units would fail to restart at the same time (without any hardware damage) are low.
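To put a rough number on that intuition, here is a minimal sketch that treats each chiller as an independent unit with a fixed chance of failing to restart. The 5% per-unit figure is a hypothetical placeholder, not a measured value, and the independence assumption is an idealization: a shared trigger or shared control logic is exactly what would break it.

```python
from math import comb


def prob_at_least_k_fail(n: int, k: int, p: float) -> float:
    """Probability that at least k of n independent units fail to restart,
    when each unit fails with probability p (upper tail of a binomial)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


# Hypothetical per-unit restart-failure probability (assumption, not field data).
P_FAIL = 0.05

# Chance that five or more of seven independent chillers fail to restart.
print(f"{prob_at_least_k_fail(7, 5, P_FAIL):.2e}")  # prints 6.03e-06
```

Under these assumptions the result is roughly six in a million. When an event that rare does occur, the more plausible reading is that the units were not failing independently, which is why attention shifts to whatever they have in common.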

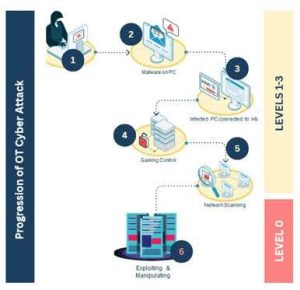

We don’t know exactly what happened in Sydney. But we do know how a cyberattack could produce the same outcome – without leaving any obvious forensic trail. The diagram below outlines one such progression, based on common techniques used in modern OT intrusions.

Diagram 1: From Remote Access to Physical Manipulation – An OT Attack Path

The sequence above shows how an attacker could move from initial network access to manipulating physical infrastructure, without triggering alarms or damaging equipment.

- Initial compromise: A phishing email or third-party credential leak allows access to a business-side workstation.

- Malware deployment: Remote access tools or automation scripts are installed on the device.

- Pivot to OT network: The compromised PC is connected to a Human-Machine Interface (HMI) or engineering workstation used for building controls.

- Privilege escalation: The attacker gains deeper access to the automation servers managing HVAC and power logic.

- Network discovery: Internal scanning reveals the structure of cooling, electrical, and backup systems.

- Control logic manipulation: The attacker modifies response conditions or overrides failover logic, suppressing equipment behavior without raising alarms.

The Visibility Gap That Enables Physical Compromise

In this scenario, everything appears normal at the software level. SCADA logs still record that commands were issued. HMIs show no faults. Even redundancy systems may appear “ready” – but they are never triggered.

The manipulation has occurred deep inside the control logic, in the rules that govern how and when devices respond. Without process-layer monitoring, the facility has no way to verify whether a pump started, a motor drew current, or a breaker closed. No alerts are triggered, and no tickets are raised – until workloads are taken offline due to rising heat.

This is the critical blind spot in most data center cybersecurity programs: they rely on tools that operate at the upper levels of the Purdue Model – network traffic analyzers, controller logs, and SCADA systems – without direct validation from the physical process layer. But if attackers target the operational logic itself rather than system availability, those tools may never detect it.

Level 0 monitoring – based on out-of-band electrical and signal measurements – can detect these inconsistencies in real time. It doesn’t need to “trust” the control system. It listens directly to the physical world.
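The inconsistency described here, a command on record with no physical response behind it, can be sketched as a simple reconciliation. The `Command` and `Observation` types, the device names, and the 30-second deadline are all hypothetical, chosen only for illustration; a real deployment would tune the window per device and signal type.

```python
from dataclasses import dataclass


@dataclass
class Command:
    device: str
    issued_at: float  # seconds; when the control system logged the start command


@dataclass
class Observation:
    device: str
    seen_at: float  # seconds; when an out-of-band sensor saw the device respond


def unconfirmed_commands(commands, observations, deadline_s=30.0):
    """Return commands that have no matching physical response within deadline_s."""
    missing = []
    for cmd in commands:
        confirmed = any(
            obs.device == cmd.device
            and 0.0 <= obs.seen_at - cmd.issued_at <= deadline_s
            for obs in observations
        )
        if not confirmed:
            missing.append(cmd)
    return missing


# Hypothetical data: three pumps commanded to start, only one seen responding.
commands = [Command("pump-1", 0.0), Command("pump-2", 0.0), Command("pump-3", 0.0)]
observations = [Observation("pump-1", 4.2)]

for cmd in unconfirmed_commands(commands, observations):
    print(f"ALERT: {cmd.device} commanded at t={cmd.issued_at}s, no physical response")
```

In this sketch the control-system log would look healthy, since all three commands were issued, while the reconciliation flags pump-2 and pump-3 as never having responded.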

How Process-Oriented Monitoring Works

Process-oriented cybersecurity, also referred to as Level 0 monitoring, takes a fundamentally different approach from traditional tools. Instead of relying on logs, controller status, or network traffic, it directly observes the electrical signals and physical responses of infrastructure equipment.

To be effective and not subject to manipulation, this monitoring is out-of-band, meaning it operates independently from the control system. It doesn’t rely on SCADA data. Instead, it taps into raw signals like:

- Motor current and voltage

- Actuator position or torque

- Flow rate, pressure, and temperature changes

Because these signals are captured from the physical side of the system, they can’t be spoofed or hidden by compromised logic or a hacked HMI. If a command is issued but the motor never draws current, or a pump fails to create flow, the Level 0 system registers that in real time.
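As a minimal sketch of how a raw signal becomes a yes-or-no answer, the snippet below computes the RMS of sampled motor current and compares it with a threshold. The 2 A threshold, the function names, and the synthetic sine-wave samples are assumptions for illustration; real values depend on the motor and the sensor.

```python
import math


def rms(samples):
    """Root-mean-square of a sequence of current samples (amperes)."""
    if not samples:
        raise ValueError("no samples")
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def motor_is_running(current_samples_a, min_running_rms_a=2.0):
    """Treat the motor as running only if measured RMS current reaches the
    threshold. The 2 A default is a hypothetical placeholder."""
    return rms(current_samples_a) >= min_running_rms_a


def sine_wave(peak_a, n=200, cycles=2):
    """Synthetic AC current samples, standing in for real sensor data."""
    return [peak_a * math.sin(2 * math.pi * cycles * i / n) for i in range(n)]


running = sine_wave(peak_a=14.1)  # about 10 A RMS, a motor under load
idle = sine_wave(peak_a=0.1)      # residual current only, motor not turning

print(motor_is_running(running))  # True
print(motor_is_running(idle))     # False
```

The point of the design is that the decision uses only the measured waveform; nothing in it consults the controller's reported status.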

In short, process-oriented monitoring doesn’t rely on what the system reports – it confirms what truly happened in the physical environment.

That’s what makes it the missing link in cyber-physical resilience.

Conclusion: From Infrastructure to Insight

Whether or not the Microsoft Australia incident was a cyberattack, it shows the exact conditions that make one possible (and invisible until it’s too late):

- A highly automated environment

- A multi-system failure with no equipment damage

- A recovery logic breakdown across redundant assets

- And no process-layer visibility to confirm or refute what actually happened

These failures weren’t caused by faulty hardware; they were likely caused by a disruption in control logic. And because there was no independent visibility into whether critical infrastructure actually responded to commands, the issue went undetected. Traditional tools showed that systems were online and commands were issued. But no one could see what was really happening on the ground.

That’s why Level 0 monitoring is no longer a nice-to-have. It provides direct evidence of physical behavior – independent of the control system. And in high-stakes, high-value-target environments like data centers, that’s the only way to know when critical systems are being quietly turned against you.

About the Author

Michael Schumacher is an exceptional Sales Leader with 25 years of success in high-end solution sales, consistently driving revenue growth and market expansion in SaaS, IT Security, and enterprise solutions. “Schu” – as he is known in the industry – has a proven track record of achievement, including multiple President’s Club awards as both an individual contributor and North America sales leader. He is an expert in go-to-market strategy, customer relationship management, and building high-performing teams. Schu has spent his adult life as a coach, mentor, and student, with a Bachelor of Science in Biology from Hampden-Sydney College, and he is the proud father of four.